Generative artificial intelligence (AI) has advanced rapidly in recent years, changing how work is done in fields such as art, design, gaming, and healthcare. Image generation has been one of its most visible successes. Generative image models, built on architectures such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), can produce realistic, novel images without a human drawing them. In this article, we explain how these models work, where they are used, and what they mean for AI development more broadly.

1. Understanding Generative AI Image Models

Generative AI image models are algorithms that create new images resembling real-world photographs or artwork. They learn patterns and features from a collection of existing images and use that knowledge to produce images that did not appear in the training set. GANs, introduced by Ian Goodfellow and his colleagues in 2014, are one of the most widely used approaches. A GAN consists of two neural networks, a generator and a discriminator, that are trained against each other: the generator tries to produce convincing images, the discriminator tries to tell them apart from real ones, and this competition pushes the generator toward increasingly realistic output.
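For reference, the adversarial game described above is usually written as the minimax objective from Goodfellow et al.'s 2014 paper. Here $D(x)$ is the discriminator's estimated probability that $x$ is a real image, $G(z)$ is the image the generator produces from a noise vector $z$, $p_{\text{data}}$ is the distribution of training images, and $p_z$ is a simple noise distribution such as a standard Gaussian:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
```

The discriminator tries to maximize $V$ by scoring real images near 1 and generated ones near 0, while the generator tries to minimize it by making $D(G(z))$ large. In practice the generator is often trained to maximize $\log D(G(z))$ instead, a variant suggested in the same paper because it gives stronger gradients early in training.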

2. Key Components of a GAN

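a. Generator: The generator takes a random noise vector as input and transforms it into an image. It never sees the training images directly; instead, it learns from the discriminator's feedback, adjusting its parameters so that its images are more likely to be judged real. Early in training its outputs look like noise, and over many updates they come to resemble images from the training data.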

b. Discriminator: The discriminator is a second neural network that receives both real images from the training dataset and fake images produced by the generator, and classifies each one as real or fake. (In Wasserstein GAN variants it is called the critic and outputs an unbounded realism score instead of a probability.) Its judgments are the signal the generator learns from.
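To make the generator/discriminator interplay concrete, here is a minimal sketch in plain Python that works on one-dimensional numbers rather than images. It assumes a deliberately tiny, hypothetical model: the generator is `G(z) = a*z + b` and the discriminator is logistic regression `D(x) = sigmoid(w*x + c)`, with gradients derived by hand. Each call to `train_step` first updates the discriminator to separate real from generated samples, then updates the generator using the non-saturating loss `-log D(G(z))`. The class and method names are illustrative only, not part of any library.

```python
import math
import random


def sigmoid(t: float) -> float:
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def softplus(t: float) -> float:
    # log(1 + exp(t)) without overflow; -log D = softplus(-s), -log(1 - D) = softplus(s).
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))


class ToyGAN:
    """Hypothetical 1-D GAN: G(z) = a*z + b, D(x) = sigmoid(w*x + c)."""

    def __init__(self, lr: float = 0.05, seed: int = 0):
        self.rng = random.Random(seed)
        self.lr = lr
        self.a, self.b = 1.0, 0.0  # generator parameters
        self.w, self.c = 0.0, 0.0  # discriminator parameters

    def generate(self, zs):
        # Generator: turn noise values z ~ N(0, 1) into candidate samples.
        return [self.a * z + self.b for z in zs]

    def train_step(self, real):
        n = len(real)
        zs = [self.rng.gauss(0.0, 1.0) for _ in range(n)]
        fake = self.generate(zs)

        # Discriminator step: minimise -log D(real) - log(1 - D(fake)).
        s_real = [self.w * x + self.c for x in real]
        s_fake = [self.w * x + self.c for x in fake]
        d_loss = (sum(softplus(-s) for s in s_real) + sum(softplus(s) for s in s_fake)) / n
        # d(loss)/d(logit) is D - 1 for real samples and D for fake samples.
        g_real = [sigmoid(s) - 1.0 for s in s_real]
        g_fake = [sigmoid(s) for s in s_fake]
        grad_w = (sum(g * x for g, x in zip(g_real, real))
                  + sum(g * x for g, x in zip(g_fake, fake))) / n
        grad_c = (sum(g_real) + sum(g_fake)) / n
        self.w -= self.lr * grad_w
        self.c -= self.lr * grad_c

        # Generator step (non-saturating): minimise -log D(G(z)) under the updated D.
        s_fake = [self.w * x + self.c for x in fake]
        g_loss = sum(softplus(-s) for s in s_fake) / n
        g_s = [sigmoid(s) - 1.0 for s in s_fake]  # d(loss)/d(logit)
        grad_a = sum(g * self.w * z for g, z in zip(g_s, zs)) / n  # d(logit)/da = w*z
        grad_b = sum(g * self.w for g in g_s) / n                 # d(logit)/db = w
        self.a -= self.lr * grad_a
        self.b -= self.lr * grad_b
        return d_loss, g_loss


if __name__ == "__main__":
    gan = ToyGAN()
    data_rng = random.Random(1)
    for step in range(500):
        real = [data_rng.gauss(4.0, 0.5) for _ in range(64)]  # "real" data ~ N(4, 0.5^2)
        d_loss, g_loss = gan.train_step(real)
    print(f"generator a={gan.a:.2f}, b={gan.b:.2f}; last losses D={d_loss:.3f}, G={g_loss:.3f}")
```

A linear discriminator like this one mostly reacts to differences in the mean, so it teaches the generator little about the shape of the data, and even toy GANs can oscillate rather than settle. Image GANs replace both functions with deep networks and rely on automatic differentiation instead of hand-derived gradients.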

3. Variational Autoencoders (VAEs)

Variational Autoencoders (VAEs) are another popular class of generative models, and they work quite differently from GANs. VAEs learn the structure of complex data, including images, without labels. An encoder network maps each input image to a probability distribution over codes in a compact, lower-dimensional latent space, and a decoder network turns a latent code back into a plausible image. Because training keeps this latent space close to a simple prior distribution (usually a standard normal), nearby points decode to similar images. This allows smooth interpolation between image features and lets the model generate new images by sampling latent points and decoding them.
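The two VAE mechanics described above, sampling from the latent space and interpolating within it, can be sketched in plain Python. This assumes a hypothetical encoder that outputs a mean vector `mu` and a log-variance vector `logvar` for each image, and a decoder (not shown) that maps a latent vector back to an image. The KL term is the standard closed-form penalty that pulls each encoded distribution toward a standard normal prior, which is what keeps the latent space smooth enough to sample and interpolate; a full VAE loss adds a reconstruction term. All function names here are illustrative.

```python
import math
import random


def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) (the reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) )."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def interpolate(z_start, z_end, steps):
    """Evenly spaced latent codes from z_start to z_end, inclusive (steps >= 2)."""
    path = []
    for i in range(steps):
        t = i / (steps - 1)
        path.append([(1.0 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path


if __name__ == "__main__":
    rng = random.Random(0)
    # Made-up encoder outputs for two images, using a 2-D latent space for readability.
    mu_a, logvar_a = [0.5, -1.0], [-2.0, -2.0]
    mu_b, logvar_b = [-0.8, 1.2], [-2.0, -2.0]
    z_a = reparameterize(mu_a, logvar_a, rng)
    z_b = reparameterize(mu_b, logvar_b, rng)
    for z in interpolate(z_a, z_b, steps=5):
        print([round(v, 3) for v in z])  # each code would be passed to the decoder
    # A brand-new image: draw z from the prior N(0, I) and decode it.
    z_new = [rng.gauss(0.0, 1.0) for _ in range(2)]
    print("new latent code:", [round(v, 3) for v in z_new])
    print("KL penalty for image A:", round(kl_to_standard_normal(mu_a, logvar_a), 3))
```

Writing the sample as `mu + sigma * eps` keeps the randomness in `eps`, so in a real VAE gradients can flow back through `mu` and `logvar` into the encoder.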

4. Applications of Generative AI Image Models

a. Art and Design: Generative image models give artists and designers a new creative tool. They can generate distinctive visual elements, patterns, and textures to use as raw material or inspiration for artwork and designs.

b. Gaming: Game developers use generative image models to help create characters, landscapes, and other in-game assets. This can reduce the amount of manual asset creation required and make it practical to offer players more varied content.

c. Data Augmentation: Many machine learning tasks depend on large, diverse training datasets. Generative image models can augment an existing dataset with synthetic images, which can improve the performance of models trained on it, especially when real examples are scarce or some classes are underrepresented.

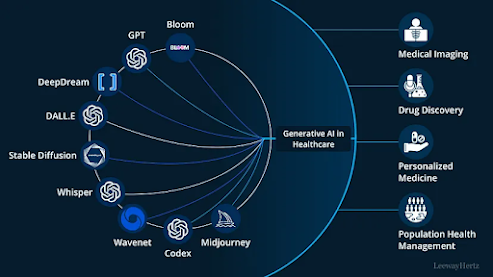

d. Medical Imaging: In healthcare, generative image models are used to reconstruct medical images, increase their resolution, and synthesize images showing specific medical conditions, supporting both research and medical training.
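The data-augmentation use case in item c can be sketched as follows. To stay self-contained, the "generator" here is a per-feature Gaussian fitted to the real examples of one class, a deliberately simple stand-in for a trained GAN or VAE; the function names and the toy two-feature vectors are hypothetical. The pattern is the same with a real model: generate extra examples for an underrepresented class and append them to the training set.

```python
import random
import statistics


def fit_gaussian_generator(samples, rng):
    """Hypothetical stand-in for a trained generator: one Gaussian per feature.

    `samples` must be a non-empty list of equal-length feature vectors.
    """
    columns = list(zip(*samples))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]
    return lambda: [rng.gauss(m, s) for m, s in zip(means, stds)]


def augment_class(dataset, label, target_count, rng):
    """Append synthetic (features, label) pairs until `label` has target_count examples."""
    real = [x for x, y in dataset if y == label]
    generate = fit_gaussian_generator(real, rng)
    n_missing = max(0, target_count - len(real))
    synthetic = [(generate(), label) for _ in range(n_missing)]
    return dataset + synthetic  # the input dataset is not modified


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy dataset of (features, label) pairs with an underrepresented "rare" class.
    data = [([0.1, 0.2], "common"), ([0.0, 0.3], "common"), ([0.2, 0.1], "common"),
            ([0.15, 0.25], "common"), ([0.9, 0.8], "rare"), ([1.1, 0.7], "rare")]
    balanced = augment_class(data, "rare", target_count=4, rng=rng)
    print(sum(1 for _, y in balanced if y == "rare"))  # → 4
```

With a real image model, `fit_gaussian_generator` would be replaced by sampling from the trained generator. Keeping validation and test splits real-only is a sensible precaution so that reported scores reflect real data.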

5. Ethical Considerations

Generative image models raise ethical concerns, most notably around deepfakes: realistic fabricated images of real people or events that can be used to deceive. Balancing the creative potential of these models against their potential for harm remains a challenge.

Conclusion

Generative image models have become a driving force in generative AI development. Their ability to produce realistic, novel images has found applications across many industries. As the technology advances, developers and users must keep ethical considerations in view and ensure these powerful tools are used responsibly. With continued progress in the field, the interplay between human creativity and artificial intelligence holds exciting possibilities.